Researchers from UC Berkeley and ETH Zurich have demonstrated how OpenAI’s GPT-4 language model can be applied in robotics to teach low-cost robot arms to perform real-world tasks, such as cleaning up spills.

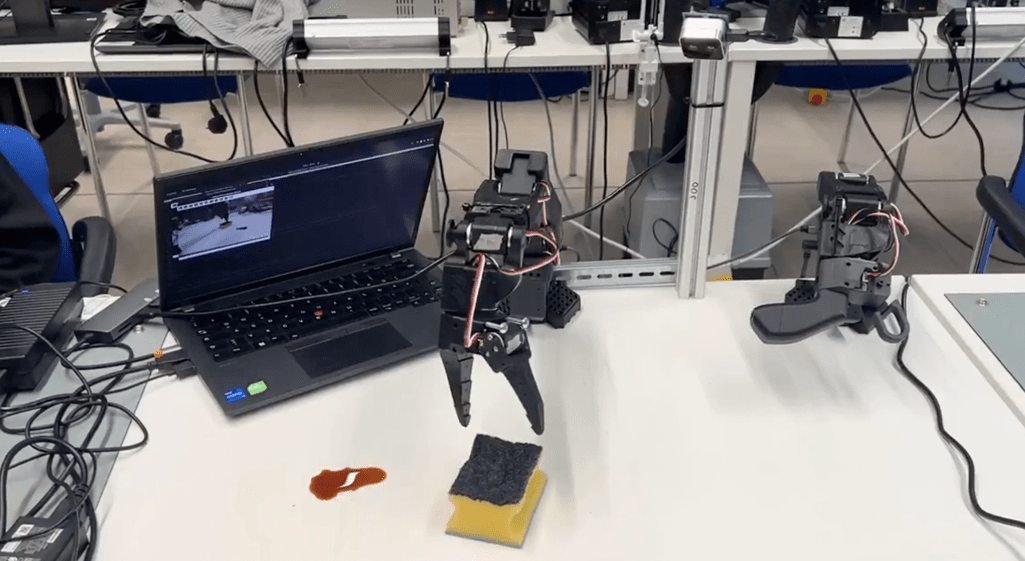

In a video shared by Jannik Grothusen, a roboticist from UC Berkeley, a robot arm identifies a sponge and a spill in front of it and responds in fluent English: “I see a sponge and a small spill on a surface.” When instructed to clean the table, the robot explains its plan: “I’ll use the robot arm to clean the table surface. First, I’ll check for available motion skills to pick up the sponge and wipe the table, then I’ll execute the sequence to clean the spill.” The robot then smoothly picks up the sponge and wipes up the spill, just as promised.

The project took only four days to build and a $250 investment in open-source robot arms, a sign of how accessible robotics is becoming. According to Grothusen, the robot’s actions were guided by a “multimodal agent” built with the LangChain framework, which translated GPT-4’s language into executable robot movements through reinforcement training.
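The plan the robot describes (check which motion skills are available, then execute them in sequence) can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the project's code: `SkillRegistry`, `plan_from_instruction`, `execute`, `build_demo_registry`, and the skill names are all hypothetical, and a simple keyword check stands in for the language model so the example runs without any external service or robot hardware.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class SkillRegistry:
    """Hypothetical registry mapping skill names to motion routines."""
    skills: Dict[str, Callable[[], str]] = field(default_factory=dict)

    def register(self, name: str, fn: Callable[[], str]) -> None:
        self.skills[name] = fn

    def available(self) -> List[str]:
        # The agent would consult this list before committing to a plan.
        return sorted(self.skills)


def plan_from_instruction(instruction: str, available: List[str]) -> List[str]:
    """Stand-in for the language model (an assumption, not the real agent).

    A real system would send the instruction and camera image to the model
    and receive a skill sequence back; here a keyword check plays that role.
    """
    wanted: List[str] = []
    if "clean" in instruction.lower():
        wanted = ["pick_up_sponge", "wipe_table"]
    # Only keep steps the robot can actually perform.
    return [step for step in wanted if step in available]


def execute(plan: List[str], registry: SkillRegistry) -> List[str]:
    """Run each planned skill in order and collect its status message."""
    return [registry.skills[name]() for name in plan]


def build_demo_registry() -> SkillRegistry:
    """Register two placeholder skills that only report what they would do."""
    registry = SkillRegistry()
    registry.register("pick_up_sponge", lambda: "picked up sponge")
    registry.register("wipe_table", lambda: "wiped table")
    return registry


if __name__ == "__main__":
    registry = build_demo_registry()
    plan = plan_from_instruction("Please clean the table", registry.available())
    for status in execute(plan, registry):
        print(status)
```

Keeping the planner separate from the skill registry mirrors the division described in the article: the language model decides what to do, while the arm's motion skills determine how it is done.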

In a LinkedIn post, Grothusen described the experiment as a “proof-of-concept for a robot control architecture,” demonstrating how vision-language models can support human-robot interaction, reasoning, and task orchestration. The result suggests that inexpensive open-source hardware, paired with an advanced AI language model, can go from idea to working demonstration in a matter of days.

Although it remains to be seen if this concept will evolve into fully functional household cleaning robots, the project serves as a promising foundation for future developments in affordable, practical robotics.