Anthropic, an AI research and development company, is advancing its AI technology with the release of Claude 3.5 Sonnet. This upgraded model can now control desktop applications with its new “Computer Use” API, which allows it to interact with software in a way that mimics human users.

Anthropic’s vision for Claude 3.5 Sonnet dates back to a pitch made to investors, in which the company outlined its goal of creating AI capable of automating research, emails, and other back-office tasks. It referred to this concept as a “next-gen algorithm for AI self-teaching,” which it believes could one day automate large segments of the economy. With the release of Claude 3.5 Sonnet, that vision is beginning to take shape.

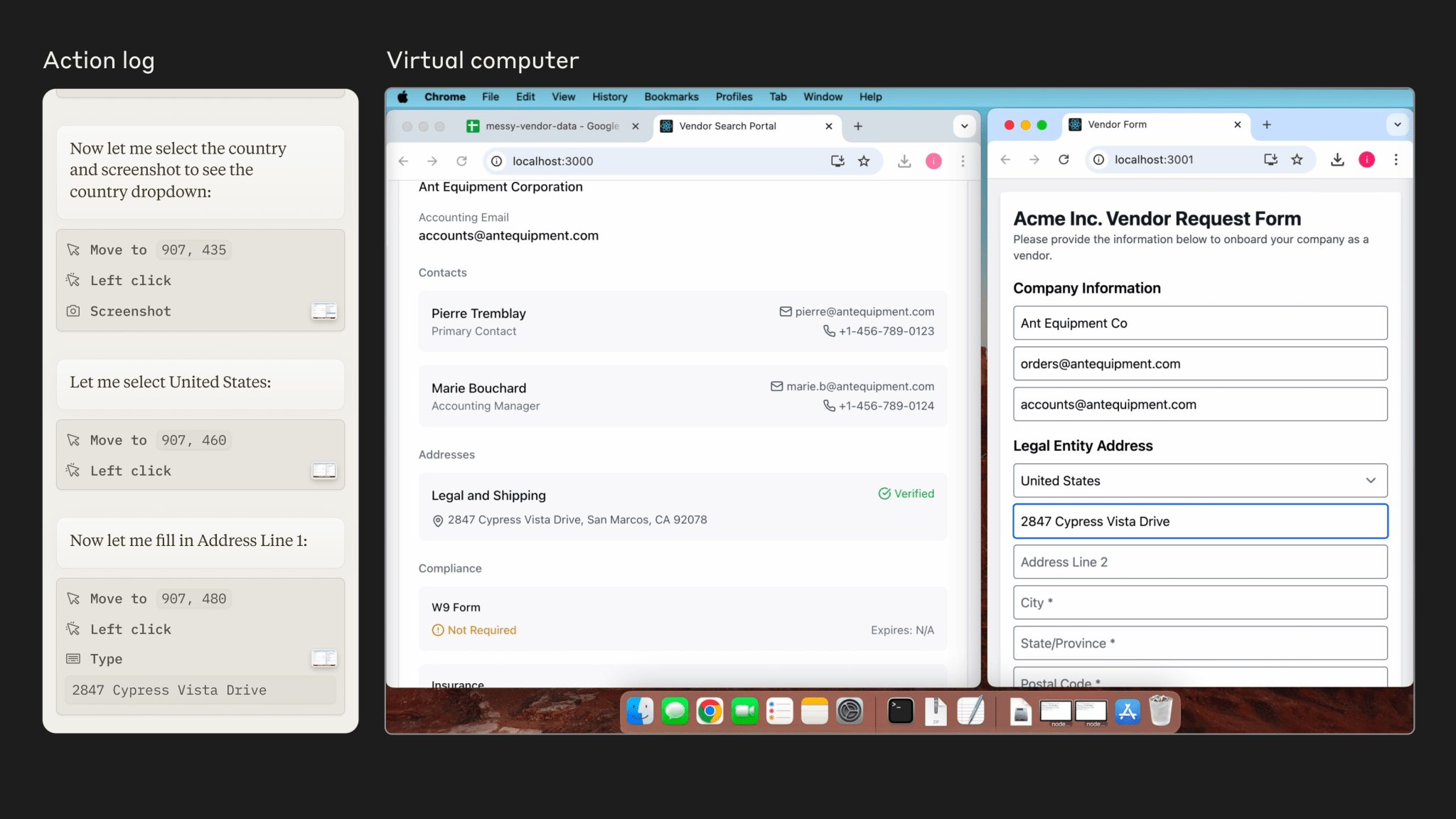

On Tuesday, Anthropic introduced the upgraded model with a groundbreaking feature: the ability to control any desktop app using a new “Computer Use” API. This API, currently in open beta, allows Claude to perform tasks such as clicking buttons, moving cursors, and interacting with software just like a human operator. Anthropic explained, “We trained Claude to see what’s happening on a screen and then use the software tools available to carry out tasks.”
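The "see the screen, then act" cycle described above can be pictured as a simple observe-and-act loop. The sketch below is a toy simulation only and does not use Anthropic's API: `FakeDesktop`, `Action`, `choose_action`, and `run_agent` are hypothetical names invented for illustration, and the hard-coded policy stands in for whatever the model would decide from a real screenshot.

```python
from dataclasses import dataclass


@dataclass
class Action:
    kind: str  # "move", "click", or "done"
    x: int = 0
    y: int = 0


@dataclass
class FakeDesktop:
    """Hypothetical stand-in for a real screen: one button at a fixed spot."""
    button_at: tuple = (40, 20)
    cursor: tuple = (0, 0)
    clicked: bool = False

    def screenshot(self) -> dict:
        # A real agent would receive pixels; this toy returns state directly.
        return {"cursor": self.cursor, "button_at": self.button_at,
                "clicked": self.clicked}

    def apply(self, action: Action) -> None:
        if action.kind == "move":
            self.cursor = (action.x, action.y)
        elif action.kind == "click" and self.cursor == self.button_at:
            self.clicked = True


def choose_action(observation: dict) -> Action:
    """Hypothetical policy standing in for the model's decision."""
    if observation["clicked"]:
        return Action("done")
    if observation["cursor"] != observation["button_at"]:
        x, y = observation["button_at"]
        return Action("move", x, y)
    return Action("click")


def run_agent(desktop: FakeDesktop, max_steps: int = 10) -> list:
    """Observe, decide, act, and repeat until done or out of steps."""
    history = []
    for _ in range(max_steps):
        action = choose_action(desktop.screenshot())
        history.append(action.kind)
        if action.kind == "done":
            break
        desktop.apply(action)
    return history
```

Calling `run_agent(FakeDesktop())` moves the cursor to the button, clicks it, and then stops. The step limit is a design choice assumed here so that a confused agent cannot loop forever.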

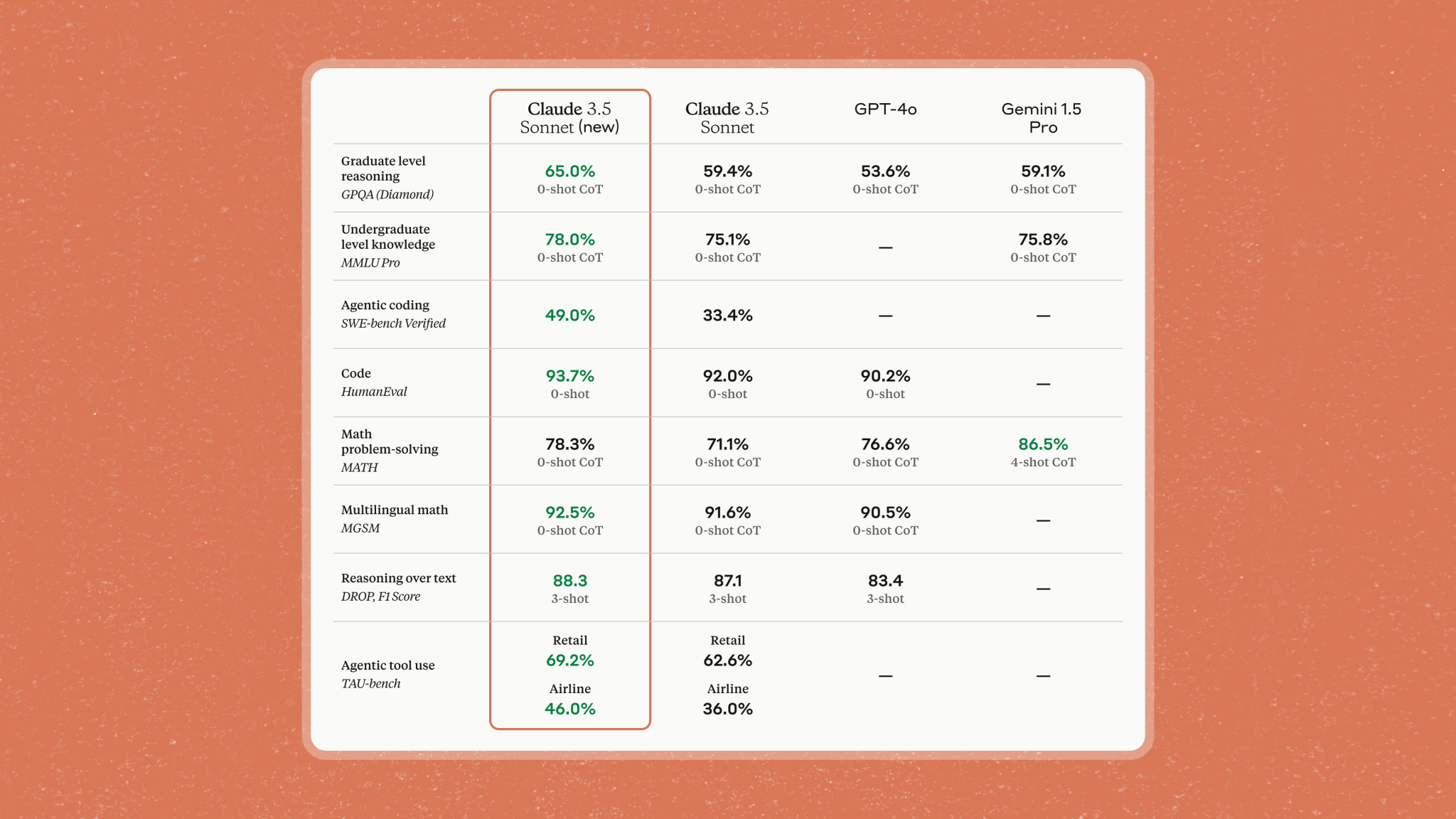

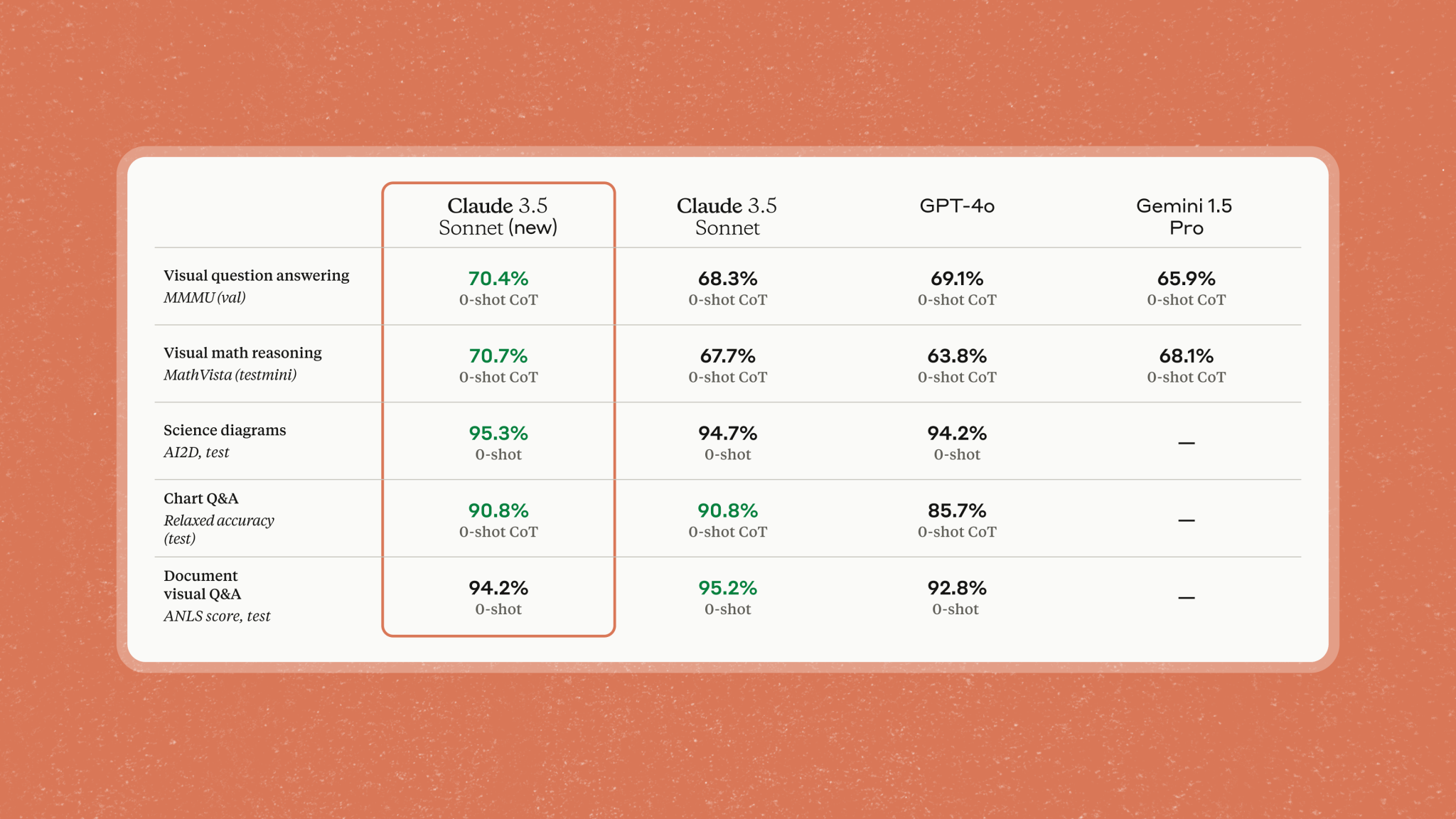

Developers can now experiment with Claude’s abilities through platforms like Amazon Bedrock, Google Cloud’s Vertex AI, and Anthropic’s API. This updated version improves on earlier models in areas such as coding and complex task execution.

The ability to automate desktop tasks is not a novel idea, and many companies offer similar solutions. From Robotic Process Automation (RPA) vendors to newer startups like Relay and Induced AI, automation has long been a focus in the tech industry. However, Anthropic’s approach to AI agents, which it refers to as an “action-execution layer,” sets Claude 3.5 Sonnet apart. This layer allows the model not only to automate processes but also to self-correct and retry tasks when it encounters obstacles. According to Anthropic, Claude 3.5 Sonnet’s performance in coding tasks even surpasses that of OpenAI’s models.
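The self-correct-and-retry behavior can be illustrated with a generic retry loop that feeds the reason for a failure back into the next attempt. This is a minimal sketch under stated assumptions, not a description of how Anthropic implements it: `run_with_retries`, `fill_date`, and `check_date` are hypothetical helpers made up for this example.

```python
from typing import Callable, Optional, Tuple


def run_with_retries(
    attempt: Callable[[Optional[str]], str],
    check: Callable[[str], Optional[str]],
    max_attempts: int = 3,
) -> Tuple[str, int]:
    """Retry a task, passing the last failure message to the next attempt.

    `check` returns None on success or a short description of the problem.
    """
    feedback: Optional[str] = None
    for n in range(1, max_attempts + 1):
        result = attempt(feedback)
        feedback = check(result)
        if feedback is None:
            return result, n
    raise RuntimeError(f"gave up after {max_attempts} attempts: {feedback}")


def fill_date(feedback: Optional[str]) -> str:
    # Hypothetical task: the first try uses the wrong date format,
    # and any feedback triggers the corrected format.
    return "2024-10-22" if feedback else "22/10/2024"


def check_date(value: str) -> Optional[str]:
    # Hypothetical validator: accept only a YYYY-MM-DD shaped string.
    ok = len(value) == 10 and value[4] == "-" and value[7] == "-"
    return None if ok else "expected YYYY-MM-DD"
```

Here `run_with_retries(fill_date, check_date)` fails once, receives the format hint, and succeeds on the second attempt. Capping the number of attempts reflects the article's point that such agents still fail a meaningful share of tasks.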

Despite the model’s advancements, it still faces challenges. In tests, Claude struggled with tasks such as modifying flight reservations and initiating product returns, completing only about half of the tasks successfully. Anthropic admitted that its AI has trouble with actions like scrolling and zooming, and it can miss critical short-lived actions or notifications.

The introduction of this powerful technology also raises concerns about potential misuse. While Anthropic has taken measures to prevent malicious activities—such as filtering harmful tasks and retaining screenshots of interactions for 30 days—there remains a risk of the AI being exploited. A recent study found that models without desktop access could engage in harmful behavior, and with desktop capabilities, the dangers could be more pronounced.

Anthropic acknowledges the risks but believes that gradually exposing AI to real-world use will help it better understand and mitigate potential issues. “We think it’s far better to give access to computers to today’s more limited, relatively safer models,” the company stated.

To address safety, Anthropic has also implemented systems to prevent the model from interacting with sensitive sites like government portals and added classifiers to guide the AI away from high-risk actions.

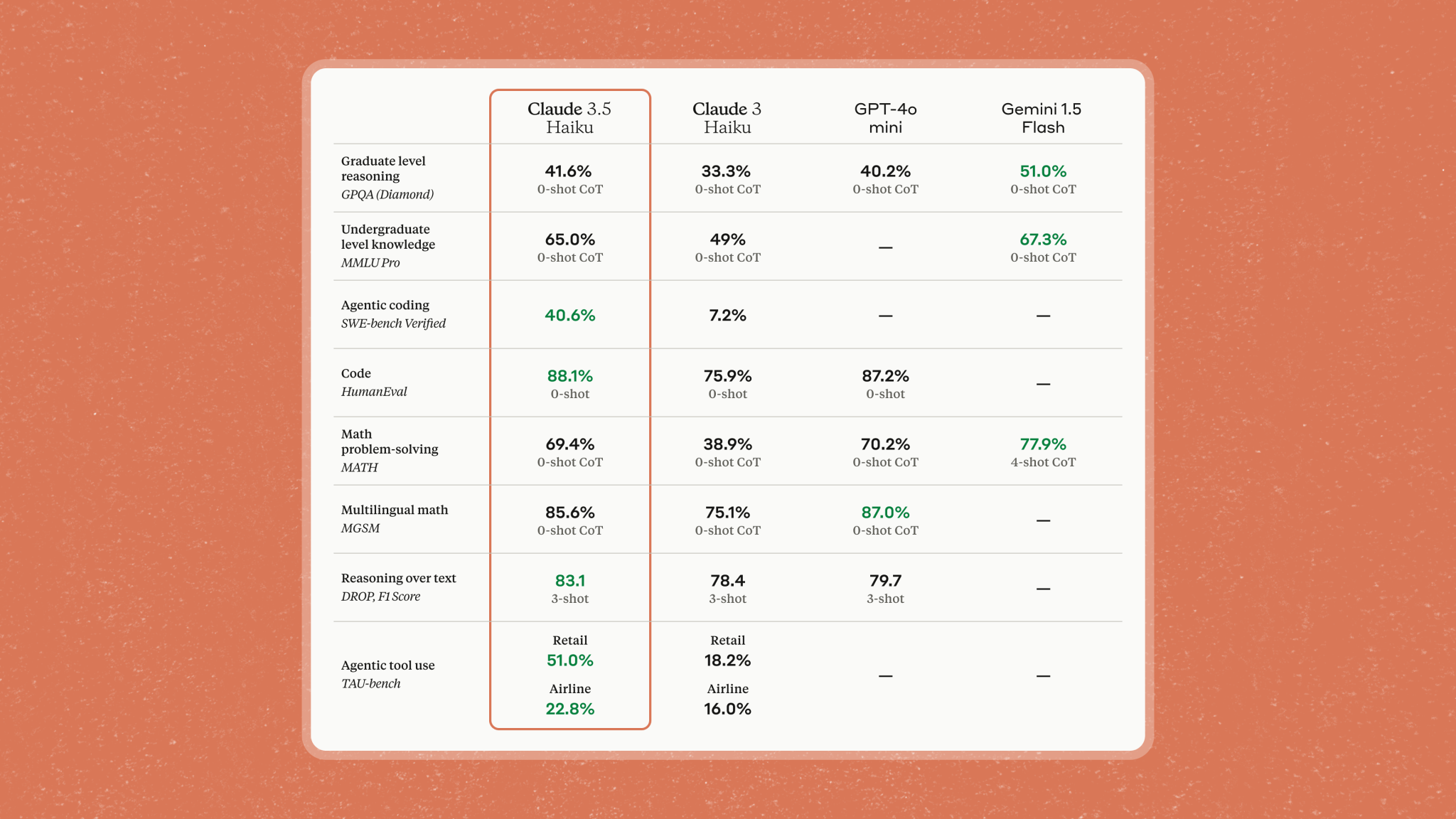

In addition to Claude 3.5 Sonnet, Anthropic announced the forthcoming release of Claude 3.5 Haiku, the most efficient model in its Claude series.

Set to launch soon, this version will offer similar performance to the previous state-of-the-art model, Claude 3 Opus, but at a lower cost and with improved speed. The Haiku model will initially be text-only, with a multimodal version to follow, capable of analyzing both text and images.