As AI continues to evolve, a major shift is underway in how machines learn and interact with the world. Historically, AI has relied on human knowledge and guidance to function, but a new generation of models, such as OpenAI’s o1, is beginning to break away from human-centric thinking. This shift echoes the breakthrough seen with DeepMind’s AlphaGo.

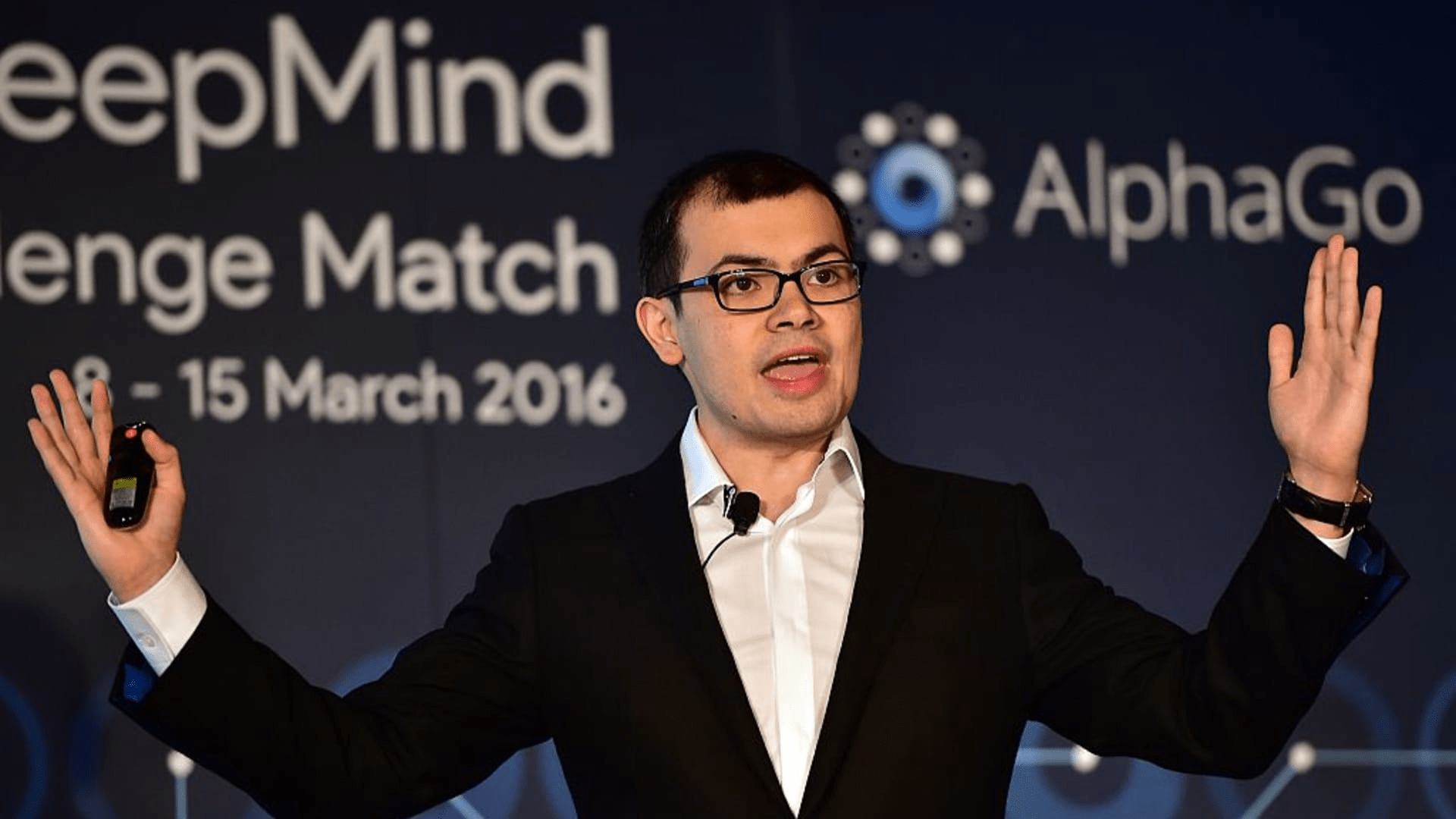

When DeepMind’s AlphaGo defeated world-champion Go player Lee Sedol in 2016, it was more than another triumph of AI over humans. What made AlphaGo remarkable was how it learned: through self-play reinforcement learning (RL). After an initial phase of studying human expert games, AlphaGo improved through trial and error across millions of games against itself, crafting an understanding of Go that departed from human strategies. It played moves that looked strange to human players but proved undeniably effective. Its successor, AlphaGo Zero, went further: given only the rules, it learned entirely from self-play, with no human games at all.

Following this success, DeepMind applied the same approach to chess with AlphaZero. Earlier engines such as IBM’s Deep Blue relied on evaluation functions and opening knowledge hand-crafted from human expertise; AlphaZero, given only the rules, built its understanding by playing millions of games against itself. In a 100-game match, AlphaZero dominated Stockfish, one of the world’s most powerful chess engines, winning 28 games and drawing the rest.

The key to DeepMind’s dominance in games like Go and chess lay in its abandonment of human-like thinking. Instead of emulating human decision-making, these AIs played to their own strengths, using immense computing power to explore far more games and positions than any human could and developing strategies from scratch.
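To make “developing strategies from scratch” concrete, here is a toy sketch of self-play reinforcement learning on a far simpler game than Go: Nim, where players take turns removing 1 to 3 stones from a pile and whoever takes the last stone wins. It is an illustration under stated assumptions, not DeepMind’s method. AlphaGo and AlphaZero paired deep neural networks with Monte Carlo tree search, whereas this sketch swaps in a lookup table updated with a negamax-style Q-learning rule. The pile limit, learning rate, exploration rate, and function names are all hypothetical choices.

```python
import random

MAX_PILE = 12        # hypothetical: largest starting pile used in training
ACTIONS = (1, 2, 3)  # a player removes 1, 2 or 3 stones per turn


def legal_moves(pile):
    """Moves available from `pile` (you cannot take more stones than remain)."""
    return [a for a in ACTIONS if a <= pile]


def train(episodes=20_000, alpha=0.5, epsilon=0.2, seed=0):
    """Learn Nim purely by self-play: one shared table plays both sides.

    q[pile][a] estimates the result (+1 win, -1 loss) for the player to move
    if they take `a` stones from `pile` and both sides then play their best.
    """
    rng = random.Random(seed)
    q = {p: {a: 0.0 for a in legal_moves(p)} for p in range(1, MAX_PILE + 1)}
    for _ in range(episodes):
        pile = rng.randint(1, MAX_PILE)  # start each game from a random pile
        while pile > 0:
            moves = legal_moves(pile)
            # Trial and error: mostly play the best-known move, sometimes explore.
            if rng.random() < epsilon:
                move = rng.choice(moves)
            else:
                move = max(moves, key=lambda m: q[pile][m])
            rest = pile - move
            # Taking the last stone wins. Otherwise the mover's value is the
            # negation of the best value the opponent can reach from `rest`.
            target = 1.0 if rest == 0 else -max(q[rest].values())
            q[pile][move] += alpha * (target - q[pile][move])
            pile = rest  # the opponent, i.e. the same table, moves next
    return q


def best_move(q, pile):
    """Greedy move under the learned table."""
    return max(q[pile], key=q[pile].get)


if __name__ == "__main__":
    table = train()
    for pile in range(1, MAX_PILE + 1):
        print(f"pile {pile:2d}: take {best_move(table, pile)}")
```

Nothing in the code encodes the winning rule. After training, `best_move` should pick, from every pile that is not a multiple of 4, the move that leaves the opponent a multiple of 4, which is the known optimal strategy for this variant of Nim; from a multiple of 4 every move loses against perfect play, so the choice there is arbitrary.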

“You can tell the RL is done properly when the models cease to speak English in their chain of thought,” AI expert Andrej Karpathy said.

OpenAI’s new o1 model represents the next step in AI’s journey away from human guidance. Like AlphaGo, o1 is beginning to rely more on reinforcement learning and self-discovery, even in areas traditionally dominated by human language and reasoning. Previous language models such as GPT-4 learned mainly from vast datasets of human-written text; o1 is additionally trained with large-scale reinforcement learning, which lets it explore problem-solving strategies of its own.

The introduction of “thinking time” is one of o1’s standout features. Before answering, the model reasons its way through a problem, generating a “chain of thought” that resembles human deliberation. However, unlike past models that followed pre-set reasoning patterns, o1 learned to reason through trial and error: during training, it tries different reasoning steps and learns which paths lead to correct answers.

This shift is crucial in domains where an answer is clearly right or wrong, such as math, coding, or factual questions. Previous AI models could “hallucinate,” confidently giving wrong answers; o1 is designed to reduce such errors through reinforcement learning, which rewards reasoning that reaches correct answers. This allows it to learn from its mistakes and build a more reliable understanding of the problems it encounters.
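The trial-and-error idea behind learning from right-or-wrong answers can be illustrated with a deliberately tiny example. OpenAI has not published o1’s training procedure, so this is not o1’s method; it is a generic sketch of reinforcement learning with a verifiable reward, assuming a “policy” that chooses among three hypothetical reasoning strategies (`guess`, `estimate`, `step_by_step`) for two-digit addition problems. An automatic checker gives reward 1 only when the final answer is correct, and a REINFORCE policy-gradient update shifts probability toward whichever strategy earns that reward.

```python
import math
import random


# Hypothetical "reasoning strategies" the toy policy can choose between.
def guess(a, b, rng):
    """Confidently blurt out a number: almost always wrong (a 'hallucination')."""
    return rng.randint(0, 198)


def estimate(a, b, rng):
    """Round both operands to the nearest ten first: fast but usually off."""
    return round(a, -1) + round(b, -1)


def step_by_step(a, b, rng):
    """Work the sum out exactly."""
    return a + b


STRATEGIES = {"guess": guess, "estimate": estimate, "step_by_step": step_by_step}


def softmax(logits):
    """Turn a dict of logits into a dict of probabilities."""
    m = max(logits.values())
    exps = {k: math.exp(v - m) for k, v in logits.items()}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}


def train(steps=3000, lr=0.1, seed=0):
    rng = random.Random(seed)
    logits = {name: 0.0 for name in STRATEGIES}  # start with no preference
    baseline = 0.0                               # running average reward
    for _ in range(steps):
        a, b = rng.randint(0, 99), rng.randint(0, 99)
        probs = softmax(logits)
        names = list(probs)
        choice = rng.choices(names, weights=[probs[n] for n in names])[0]
        answer = STRATEGIES[choice](a, b, rng)
        # Verifiable reward: the checker knows the right answer.
        reward = 1.0 if answer == a + b else 0.0
        advantage = reward - baseline
        # REINFORCE: the gradient of log softmax is (1[name == choice] - p).
        for name in names:
            indicator = 1.0 if name == choice else 0.0
            logits[name] += lr * advantage * (indicator - probs[name])
        baseline += 0.05 * (reward - baseline)
    return softmax(logits)


if __name__ == "__main__":
    for name, p in train().items():
        print(f"{name:>12}: {p:.3f}")
```

Calling `train()` should return a distribution concentrated on `step_by_step`. The confident but wrong `guess` strategy is driven out because the checker almost never rewards it, not because anyone labeled it as bad. Real systems operate on token sequences produced by a large neural network rather than on three named strategies, and their reward checks are far more involved.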

In areas where human expertise has set the standard, o1’s approach is already producing striking results. By learning from vast numbers of its own attempts, it is starting to surpass even PhD-level performance in some domains; OpenAI reported that o1 exceeds human PhD-level accuracy on GPQA, a benchmark of physics, biology, and chemistry questions. As these models continue to evolve, they are beginning to step beyond human understanding, forging their own path toward higher levels of intelligence.

The most intriguing prospect lies not in language models alone, but in AI systems embedded in physical bodies. Companies such as Tesla and Sanctuary AI are building humanoid robots, and robots trained with reinforcement learning can explore the physical world through the same kind of trial and error that AlphaGo used to master Go. These robots will likely develop their own understanding of reality, free from the limitations of human knowledge.

As these AI systems begin to interact with the physical world by touching, manipulating, and experimenting with objects, they will build their own body of knowledge about how the universe works. Rather than relying on human-designed scientific methods or theories, they will discover new principles and technologies through their interactions, potentially making breakthroughs that humans would never have conceived.