The future with self-driving cars is exciting, but it is equally complex and challenging. One problem developers have to tackle is how a car should decide when it encounters a morally complex situation, such as which thing or person to hit in an unavoidable accident.

A research team at MIT has created the Moral Machine, which attempts to make people understand this conundrum by presenting a series of different traffic scenarios where a self-driving car has to make a choice between two perilous options.

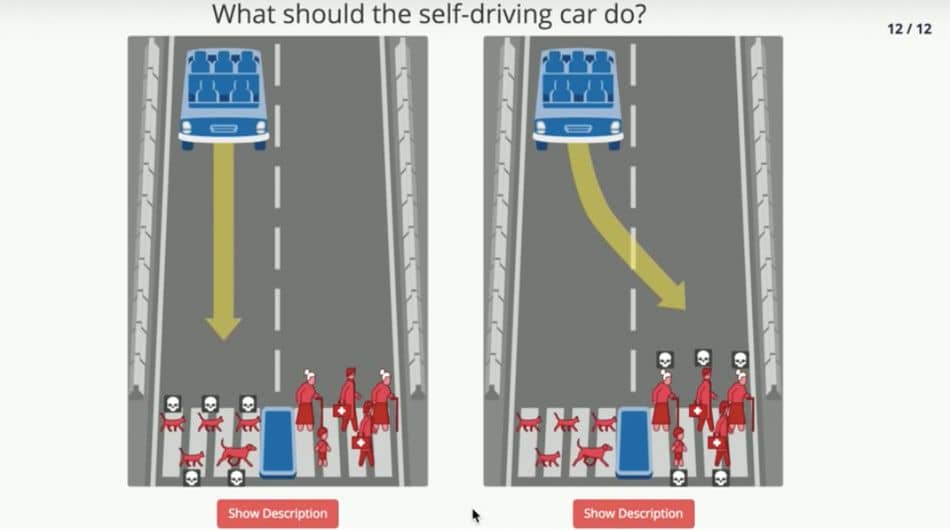

For example, the player may have to choose between hitting a group of five jaywalking pedestrians and hitting a concrete divider, killing two of the car's passengers. Or between driving into a group of young pedestrians and a group of elderly pedestrians. Would you hit cuddly cats and dogs, or a doctor, a man and an executive? Would you make the car hit a large group of homeless people obeying traffic laws or a small child jaywalking against the traffic light?

These scenarios will inevitably be forced upon self-driving cars, and MIT’s experimental site gives us a taste of just how dark things can get. Making split-second choices between a pregnant woman and small children is a tough ask in the game, and even tougher for the developers who actually have to hard-code the results.

At the end of the game, your results are shown in comparison with those of others who have played. So far, the results suggest that people usually lean toward saving female lives over male lives. They also prefer saving younger people over the elderly, and saving human lives over pets. The results on protecting passengers versus pedestrians were split roughly 50/50.

The site also allows you to design your own scenarios (e.g., see cats vs. dogs).

According to MIT, the purpose of the experiment was to provide “a platform for 1) building a crowd-sourced picture of human opinion on how machines should make decisions when faced with moral dilemmas, and 2) crowd-sourcing assembly and discussion of potential scenarios of moral consequence.”

Although this is just a “game” for now, if views like Lyft co-founder John Zimmer’s are right, companies looking to produce self-driving cars will soon have to deal with such morally complicated questions!

Did you try the survey? What were your results? Comment below!