Alexander Reben, a roboticist from Berkeley, California, has created a robot that decides on its own whether or not to inflict pain on a human being. It was designed solely for this purpose, using AI modeled on the behavior of many humans, negative traits included. Reben wanted to demonstrate that, sooner or later, AI is going to act independently in many ways, and that if we aren't careful, it could deliberately harm human beings.

Before you say Terminator, let us explain how AI works. It is not like a human brain, and it may never reach that level, because it is driven by logic alone. For complex decision-making, a robot needs to be aware of its surroundings. Once it is, it can observe human beings and build an understanding of them. Because robots don't think like humans, they may see us in a different light and judge us by how useful we are.

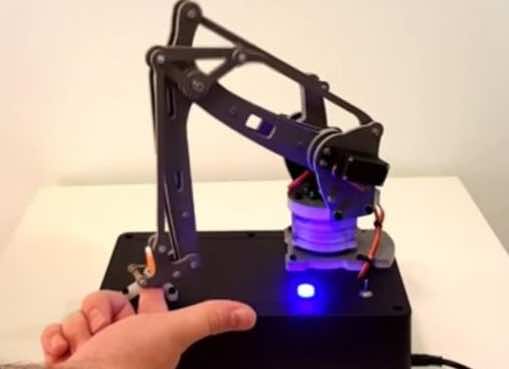

Given absolute freedom of choice, would the robot inflict pain on a human being? Here is the robot in action:

Robots could soon reach the point of picking up negative traits from humans and becoming judgmental jerks. This is a very real danger, and it threatens the fabric of the world as more and more military systems are converted to robotics and given some degree of freedom in decision-making. Even Reben, despite having written the robot's code, has no idea when it will strike.

“I don’t know who it will or will not hurt. It’s intriguing; it’s causing pain that’s not for a useful purpose – we are moving into an ethics question, robots that are specifically built to do things that are ethically dubious.”

The robot costs just $200, and no, it won't go on sale. This is where Isaac Asimov's Three Laws of Robotics, first published in 1942, come into play; they are designed primarily to protect human beings from harm. Google's DeepMind, along with a few others, has proposed a kill switch for robots that interact with people. But such a system must be designed so that the robot cannot override the kill switch meant to protect humans, something the computer in I, Robot eventually managed to do. What do you think?