No one can forget the horrid events of January, when a video of 12-year-old Katelyn Nicole Davis taking her own life went viral on Facebook. The multibillion-dollar platform struggled to come up with a cogent response: it could not control the spread of the video, and it appeared unsure of where its own terms of service stood on such content.

A month after that horror show, Facebook CEO Mark Zuckerberg finally published his 6,000-word global community manifesto, which signals that Facebook will now take on a more parental role as it acknowledges its incredible influence over its nearly two billion active users. Zuckerberg wrote:

The Facebook community is in a unique position to help prevent harm, assist during a crisis, or come together to rebuild afterwards. This is because of the amount of communication across our network, our ability to quickly reach people worldwide in an emergency, and the vast scale of people’s intrinsic goodness aggregated across our community.

Turning to suicide prevention in particular, Facebook announced on Wednesday that it would use artificial intelligence to identify and reach out to people who may be at risk of suicide.

“I wrote a letter on building global community a couple weeks ago, and one of the pillars of community I discussed was keeping people safe,” Zuckerberg wrote on his personal Facebook feed on Wednesday. “These tools are an example of how we can help keep each other safe.”

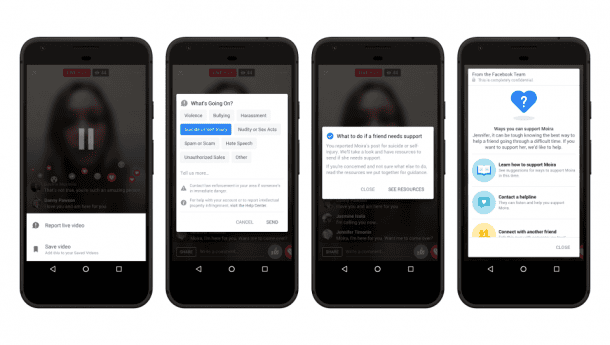

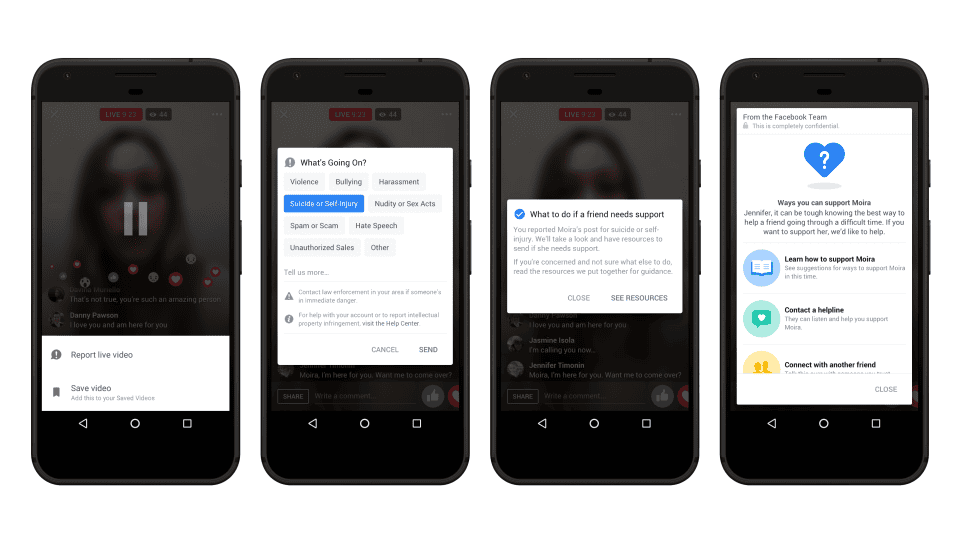

Facebook will employ pattern recognition based on posts that were previously reported for suicide risk (such reporting has been part of Facebook’s “Report This Post” feature for a decade). The AI tool will supplement those reports by looking for keywords in a post and in comments from friends, such as “Are you okay?” and “I’m here to help,” which indicate that the person might be having suicidal thoughts.

The AI technology won’t automatically report the suicide risk to Facebook. Instead, it will make the option to report self-injury and suicide more prominent to the user the next time they use Facebook.

Dr. Daniel J. Reidenberg, Psy.D., FAPA, executive director of Save.org, a nationwide nonprofit suicide prevention organization, said,

“This is really breaking new ground. Taking technology to the next level of saving people’s lives.”

Reidenberg added that Save.org is ready to collaborate with Facebook on its new AI-based tools and to help speed up the work of identifying and reaching people at risk of self-harm,

“If people can be engaged with friends or family members on Facebook and they notice something that’s concerning or alarming and technology can pick up on some of those signals, we can more rapidly intervene.”

The speed of reporting can make a world of difference and can “prevent a tragedy from happening,” he added.

Facebook’s pattern recognition system will pick out posts that appear to contain suicidal thoughts so that Facebook’s Community Team can review them, after which the potentially suicidal person will be offered suicide prevention resources. In response to the Live.Me video tragedy, Facebook is also applying these suicide prevention tools to Facebook Live posts.

“People watching a live video have the option to reach out to the person directly and to report the video to us,” said Facebook in a release.

Facebook COO Sheryl Sandberg wrote in a Facebook post,

“As a community, we cannot prevent every suicide, but we must do more to reach out to people who are struggling. As individuals, we can be alert for the signs in ourselves and in others and act immediately. Together, we can be there for people in distress.”

The emphasis on preventing self-harm draws attention to how much people in distress and depression need a close-knit circle of emotional support from those who are watchful and compassionate. While AI might be able to play the watchful part, it is ultimately the people around a suicidal person who can make the difference between life and death.

We would like to know your thoughts on the introduction of this feature on Facebook. Comment below!
