People judge others by how they react to the situations around them. But people are not the only ones who judge you. Websites do too, and the one that judges you most is Facebook.

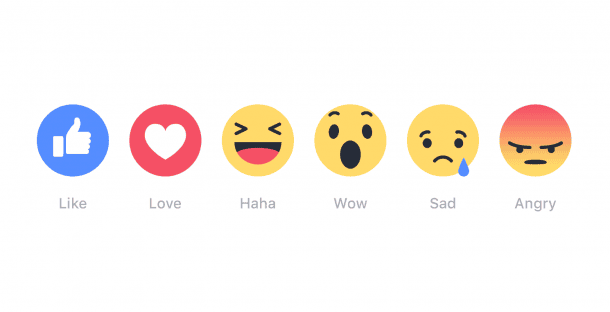

When Facebook introduced its reaction buttons, most of us saw them as a way to make people more expressive and better connected to their friends. The feature was added because not everything in the world is likable. That is true, but why does Facebook want this, and how does it benefit the company? Why is offering several reactions more useful to Facebook than a single Like button?

Nothing in the world is free, and neither is Facebook. The site uses its users to generate data and keeps working to make that data richer. Replacing the single ‘Like’ button with an array of reactions is simply a way of doing exactly that. Facebook studies your behavior and activity on the site and builds a picture of who you are as a person.

When you click a reaction button, you are not just showing your friends how you feel about something. By showing love for someone’s post or sympathizing with a sad event, you also tell Facebook what kinds of posts you would rather see, what you like and dislike, and what your interests and hobbies are. Each reaction refines Facebook’s picture of you. Click ‘Love’ on a photo of a baby or a cat, and Facebook may conclude that you are a parent, a child lover, or a pet lover. React with ‘Angry’ or ‘Sad’ to a post about Trump winning the election, and Facebook learns something about your political preferences. It also sees how often, and in what ways, you react to sports or Hollywood posts, and then tailors the content it shows you, including advertisements.

The more you react to posts, the better Facebook’s algorithm can customize what it shows you. Facebook has also manipulated its users at scale to study human behavior. In a study conducted in January 2012 and later published in PNAS under the title “Experimental evidence of massive-scale emotional contagion through social networks,” the News Feeds of 689,003 users were manipulated by reducing the number of positive or negative posts they saw, to observe how this affected their moods. The study concluded that emotions are contagious: people who saw fewer negative posts went on to write more positive posts themselves, while people who saw fewer positive posts wrote more negative ones.

This study was conducted when there was only one possible reaction, the ‘Like’ button. If Facebook ran the same study today, the new reactions would likely yield much clearer results. The study shows that Facebook can influence people’s emotions. That may not sound like much power, but such influence can reach into almost every part of your life. Ben Grosser, an artist and professor at the University of Illinois, said:

“They already have so much power. To give an algorithm and a corporation access to which of the things on your feed you are most reactive to—it’s really useful information that tells them to not just tailor content to what they think you like, but they can push you.”

As long as you keep using Facebook, its data scientists will study you. When it comes to reactions, though, there is one way to fool the algorithm. Ben Grosser has created a browser extension called ‘Go Rando’ that randomizes your Facebook reactions. Every time you click the reaction button, a random number generator picks the reaction for you, so if you press ‘Like,’ you may end up reacting with ‘Sad.’ Fooling a single Facebook algorithm may not be enough to protect you and your data, but according to Grosser, the purpose of the project is to wake people up and make them ask:

“Where does this data go? Who benefits from it? And who is made most vulnerable by it?”
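To make the idea behind Go Rando concrete, here is a minimal Python sketch of reaction randomization. It is not Go Rando’s actual code: the six-reaction list and the helper names `rando_reaction` and `simulate` are our own hypothetical assumptions, used only for illustration. It shows a user who would ‘Love’ every cat photo ending up with reactions spread across all six options.

```python
import random
from collections import Counter

# Assumed list: the six reactions Facebook offered when reactions launched.
REACTIONS = ["Like", "Love", "Haha", "Wow", "Sad", "Angry"]


def rando_reaction(intended: str, rng: random.Random) -> str:
    """Hypothetical helper: ignore the intended reaction and pick one at random."""
    # The user's real choice is deliberately discarded; every reaction is
    # equally likely, so the recorded click carries no emotional signal.
    return rng.choice(REACTIONS)


def simulate(intended_clicks: list[str], seed: int = 0) -> Counter:
    """Hypothetical helper: tally what would be recorded for a series of clicks."""
    rng = random.Random(seed)  # seeded so the demo is repeatable
    recorded = [rando_reaction(r, rng) for r in intended_clicks]
    return Counter(recorded)


if __name__ == "__main__":
    # A user who would normally 'Love' every cat photo they see...
    clicks = ["Love"] * 600
    # ...ends up with roughly 100 of each reaction on record.
    print(simulate(clicks))
```

Because every recorded reaction is drawn uniformly, the tally says almost nothing about how the user actually felt, which is exactly the signal the reaction algorithm relies on.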

It is high time you started asking what data you are giving away to Facebook and how it could affect your life in the long run.

We would appreciate your feedback in the comments section below!